The NVIDIA accelerated computing platform is main supercomputing benchmarks as soon as dominated by CPUs, enabling AI, science, enterprise and computing effectivity worldwide.

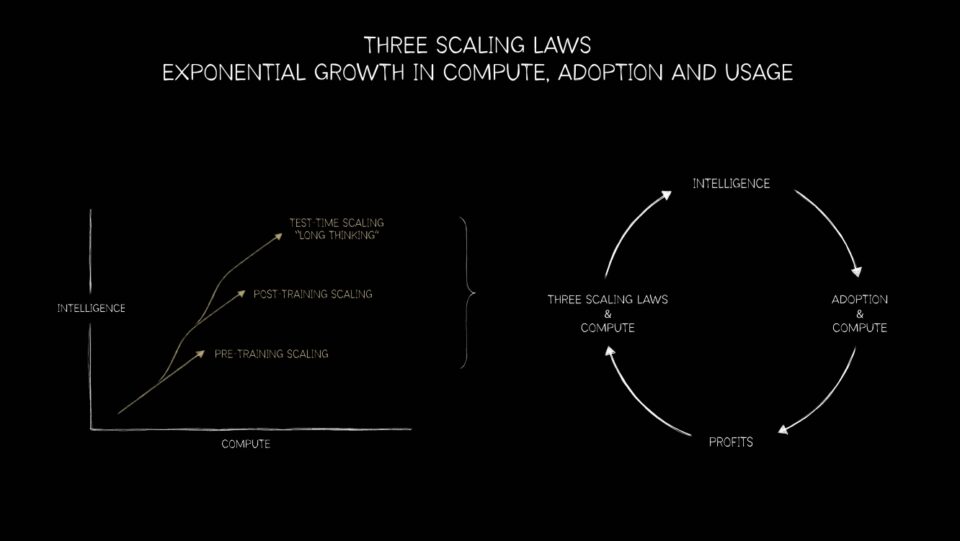

Moore’s Legislation has run its course, and parallel processing is the best way ahead. With this evolution, NVIDIA GPU platforms are actually uniquely positioned to ship on the three scaling legal guidelines — pretraining, post-training and test-time compute — for the whole lot from next-generation recommender methods and huge language fashions (LLMs) to AI brokers and past.

The CPU-to-GPU Transition: A Historic Shift in Computing ?

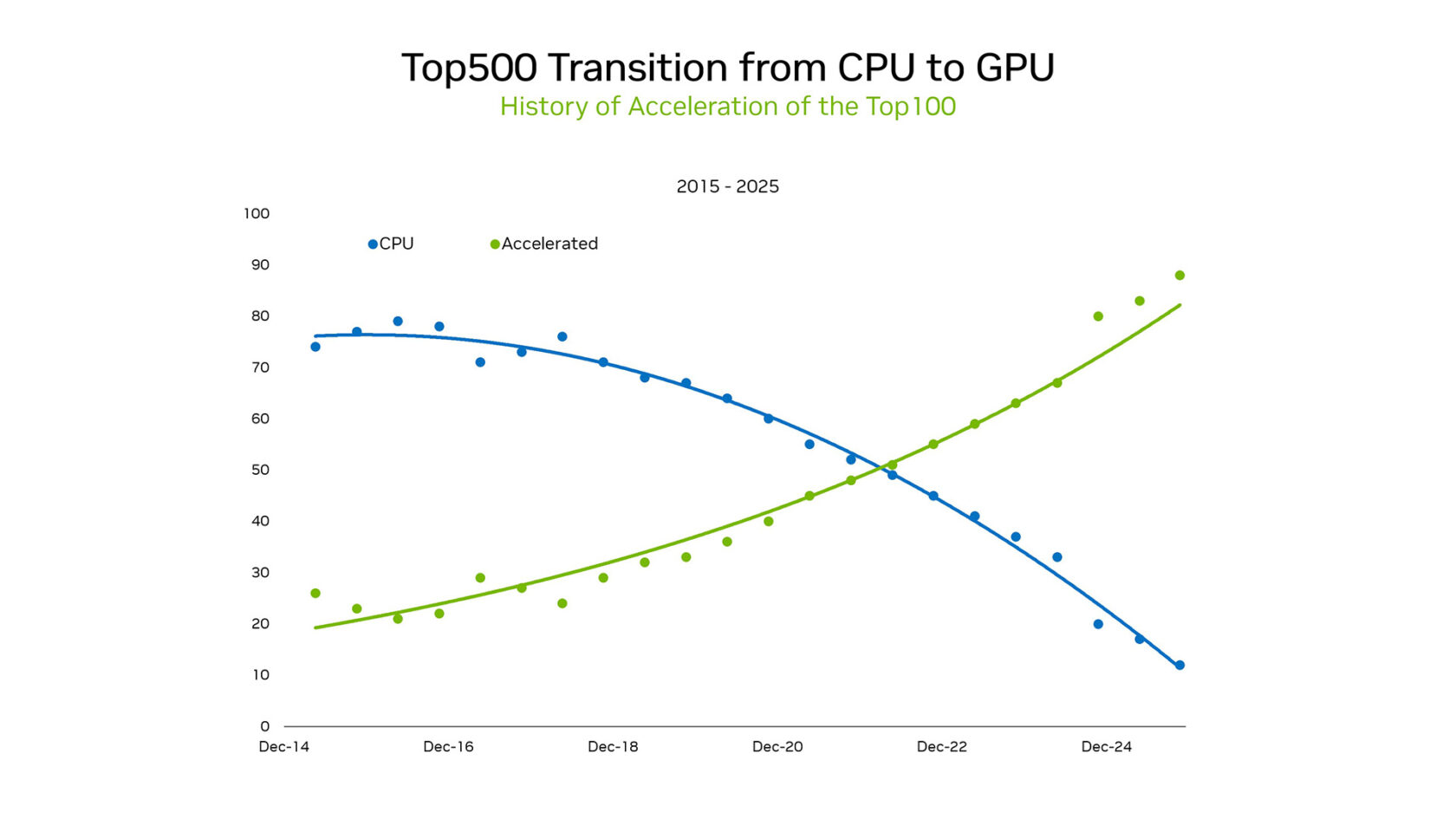

At SC25, NVIDIA founder and CEO Jensen Huang highlighted the shifting panorama. Inside the TOP100, a subset of the TOP500 listing of supercomputers, over 85% of methods use GPUs. This flip represents a historic transition from the serial?processing paradigm of CPUs to massively parallel accelerated architectures.

Earlier than 2012, machine studying was primarily based on programmed logic. Statistical fashions have been used and ran effectively on CPUs as a corpus of hard-coded guidelines. However this all modified when AlexNet operating on gaming GPUs demonstrated picture classification might be discovered by examples. Its implications have been huge for the way forward for AI, with parallel processing on growing sums of information on GPUs driving a brand new wave of computing.

This flip isn’t nearly {hardware}. It’s about platforms unlocking new science. GPUs ship way more operations per watt, making exascale sensible with out untenable vitality calls for.

Current outcomes from the Green500a rating of the world’s most energy-efficient supercomputers, underscore the distinction between GPUs versus CPUs. The highest 5 performers on this business commonplace benchmark have been all NVIDIA GPUs, delivering a median of 70.1 gigaflops per watt. In the meantime, the highest CPU-only methods supplied 15.5 flops per watt on common. This 4.5x differential between GPUs versus CPUs on vitality effectivity highlights the huge TCO (whole price of possession) benefit of shifting these methods to GPUs.

One other measure of the CPU-versus-GPU energy-efficiency and efficiency differential arrived with NVIDIA’s outcomes on the Graph500. NVIDIA delivered a record-breaking end result of 410 trillion traversed edges per second, inserting first on the Graph500 breadth-first search listing.

The profitable run greater than doubled the subsequent highest rating and utilized 8,192 NVIDIA H100 GPUs to course of a graph with 2.2 trillion vertices and 35 trillion edges. That compares with the subsequent greatest end result on the listing, which required roughly 150,000 CPUs for this workload. {Hardware} footprint reductions of this scale save time, cash and vitality.

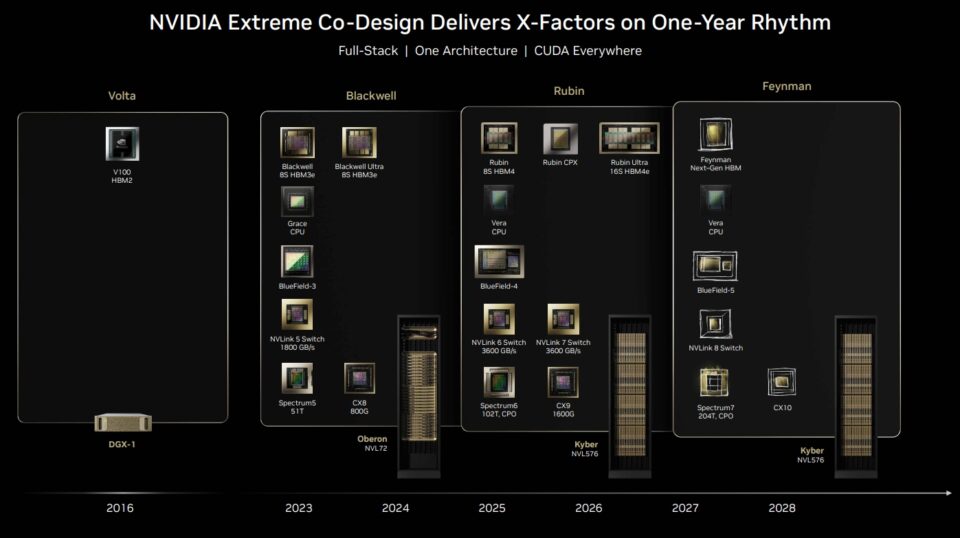

But NVIDIA showcased at SC25 that its AI supercomputing platform is excess of GPUs. Networking, CUDA libraries, reminiscence, storage and orchestration are co-designed to ship a full-stack platform.

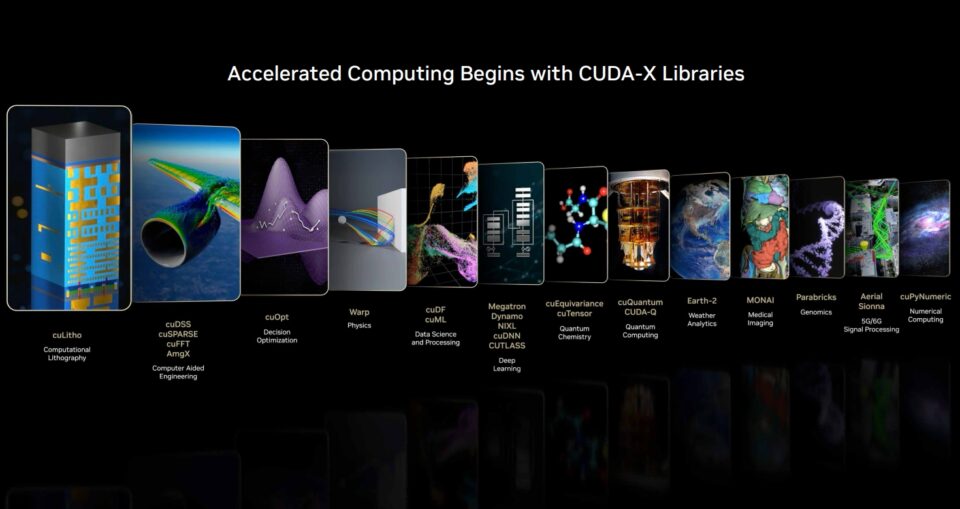

Enabled by CUDA, NVIDIA is a full-stack platform. Open-source libraries and frameworks comparable to these within the CUDA-X ecosystem are the place huge speedups happen. Snowflake just lately introduced an integration of NVIDIA A10 GPUs to supercharge knowledge science workflows. Snowflake ML now comes preinstalled with NVIDIA cuml and cuDF libraries to speed up well-liked ML algorithms with these GPUs.

With this native integration, Snowflake’s customers can simply speed up mannequin growth cycles with no code modifications required. NVIDIA’s benchmark runs present 5x much less time required for Random Forest and as much as 200x for HDBSCAN on NVIDIA A10 GPUs in contrast with CPUs.

The flip was the turning level. The scaling legal guidelines are the trajectory ahead. And at each stage, GPUs are the engine driving AI into its subsequent chapter.

However CUDA-X and plenty of open-source software program libraries and frameworks are the place a lot of the magic occurs. CUDA-X libraries speed up workloads throughout each business and utility — engineering, finance, knowledge analytics, genomics, biology, chemistry, telecommunications, robotics and rather more.

“The world has a large funding in non-AI software program. From knowledge processing to science and engineering simulations, representing a whole bunch of billions of {dollars} in compute cloud computing spend annually,” Huang stated on NVIDIA’s latest incomes name.

Many functions that when ran completely on CPUs are actually quickly shifting to CUDA GPUs. “Accelerated computing has reached a tipping level. AI has additionally reached a tipping level and is remodeling current functions whereas enabling fully new ones,” he stated.

What started as an vitality?effectivity crucial has matured right into a scientific platform: simulation and AI fused at scale. The management of NVIDIA GPUs within the TOP100 is each proof of this trajectory and a sign of what comes subsequent — breakthroughs throughout each self-discipline.

Consequently, researchers can now practice trillion?parameter fashions, simulate fusion reactors and speed up drug discovery at scales CPUs alone may by no means attain.

The Three Scaling Legal guidelines Driving AI’s Subsequent Frontier ?

The change from CPUs to GPUs is not only a milestone in supercomputing. It’s the inspiration for the three scaling legal guidelines that symbolize the roadmap for AI’s subsequent workflow: pretraining, submit?coaching and take a look at?time scaling.

Pre?coaching scaling was the primary regulation to help the business. Researchers found that as datasets, parameter counts and compute grew, mannequin efficiency improved predictably. Doubling the information or parameters meant leaps in accuracy and flexibility.

On the most recent MLPerf Coaching business benchmarks, the NVIDIA platform delivered the very best efficiency on each take a look at and was the one platform to submit on all checks. With out GPUs, the “larger is best” period of AI analysis would have stalled underneath the burden of energy budgets and time constraints.

Put up?coaching scaling extends the story. As soon as a basis mannequin is constructed, it should be refined — tuned for industries, languages or security constraints. Strategies like reinforcement studying from human suggestions, pruning and distillation require huge further compute. In some instances, the calls for rival pre?coaching itself. This is sort of a scholar bettering after fundamental training. GPUs once more present the horsepower, enabling continuous wonderful?tuning and adaptation throughout domains.

Check?time scaling, the most recent regulation, might show probably the most transformative. Trendy fashions powered by mixture-of-experts architectures can motive, plan and consider a number of options in actual time. Chain?of?thought reasoning, generative search and agentic AI demand dynamic, recursive compute — typically exceeding pretraining necessities. This stage will drive exponential demand for inference infrastructure — from knowledge facilities to edge units.

Collectively, these three legal guidelines clarify the demand for GPUs for brand spanking new AI workloads. Pretraining scaling has made GPUs indispensable. Put up?coaching scaling has bolstered their position in refinement. Check?time scaling is making certain GPUs stay vital lengthy after coaching ends. That is the subsequent chapter in accelerated computing: a lifecycle the place GPUs energy each stage of AI — from studying to reasoning to deployment.

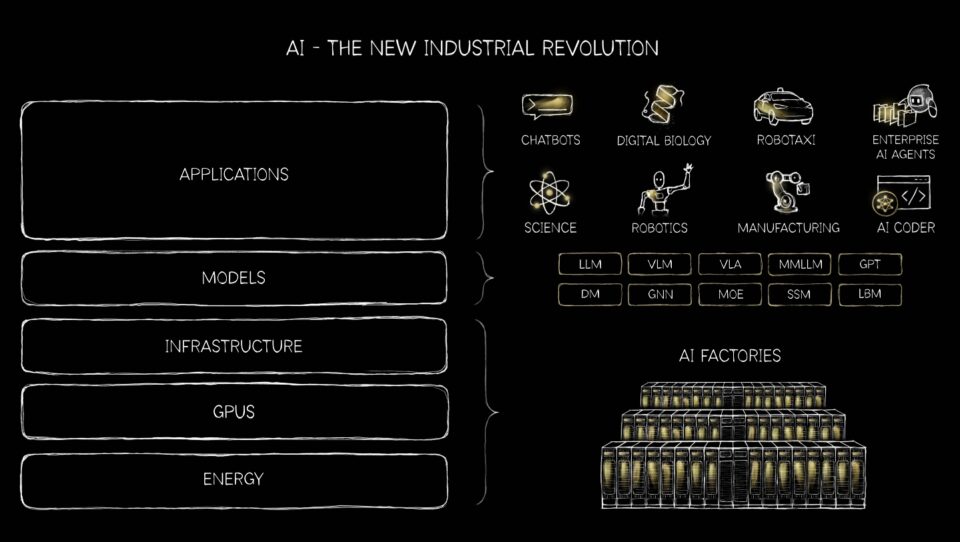

Generative, Agentic, Bodily AI and Past ?

The world of AI is increasing far past fundamental recommenders, chatbots and textual content technology. VLMs, or imaginative and prescient language fashions, are AI methods combining laptop imaginative and prescient and pure language processing for understanding and decoding photographs and textual content. And recommender methods — the engines behind personalised purchasing, streaming and social feeds — are however one in every of many examples of how the huge transition from CPUs to GPUs is reshaping AI.

In the meantime, generative AI is remodeling the whole lot from robotics and autonomous automobiles to software-as-a-service firms and represents a large funding in startups.

NVIDIA platforms are the one to run on all the main generative AI fashions and deal with 1.4 million open-source fashions.

As soon as constrained by CPU architectures, recommender methods struggled to seize the complexity of person conduct at scale. With CUDA GPUs, pretraining scaling permits fashions to study from huge datasets of clicks, purchases and preferences, uncovering richer patterns. Put up?coaching scaling wonderful?tunes these fashions for particular domains, sharpening personalization for industries from retail to leisure. On main world on-line websites, even a 1% acquire in relevance accuracy of suggestions can yield billions extra in gross sales.

Digital commerce gross sales are anticipated to succeed in $6.4 trillion worldwide for 2025, in accordance with Emarketer.

The world’s hyperscalers, a trillion-dollar business, are remodeling search, suggestions and content material understanding from classical machine studying to generative AI. NVIDIA CUDA excels at each and is the perfect platform for this transition driving infrastructure funding measured in a whole bunch of billions of {dollars}.

Now, take a look at?time scaling is remodeling inference itself: recommender engines can motive dynamically, evaluating a number of choices in actual time to ship context?conscious options. The result’s a leap in precision and relevance — suggestions that really feel much less like static lists and extra like clever steering. GPUs and scaling legal guidelines are turning advice from a background function right into a frontline functionality of agentic AI, enabling billions of individuals to kind via trillions of issues on the web with an ease that might in any other case be unfeasible.

What started as conversational interfaces powered by LLMs is now evolving into clever, autonomous methods poised to reshape almost each sector of the worldwide economic system.

We’re experiencing a foundational shift — from AI as a digital know-how to AI coming into the bodily world. This transformation calls for nothing lower than explosive development in computing infrastructure and new types of collaboration between people and machines.

Generative AI has confirmed able to not simply creating new textual content and pictures, however code, designs and even scientific hypotheses. Now, agentic AI is arriving — methods that understand, motive, plan and act autonomously. These brokers behave much less like instruments and extra like digital colleagues, finishing up advanced, multistep duties throughout industries. From authorized analysis to logistics, agentic AI guarantees to speed up productiveness by serving as autonomous digital staff.

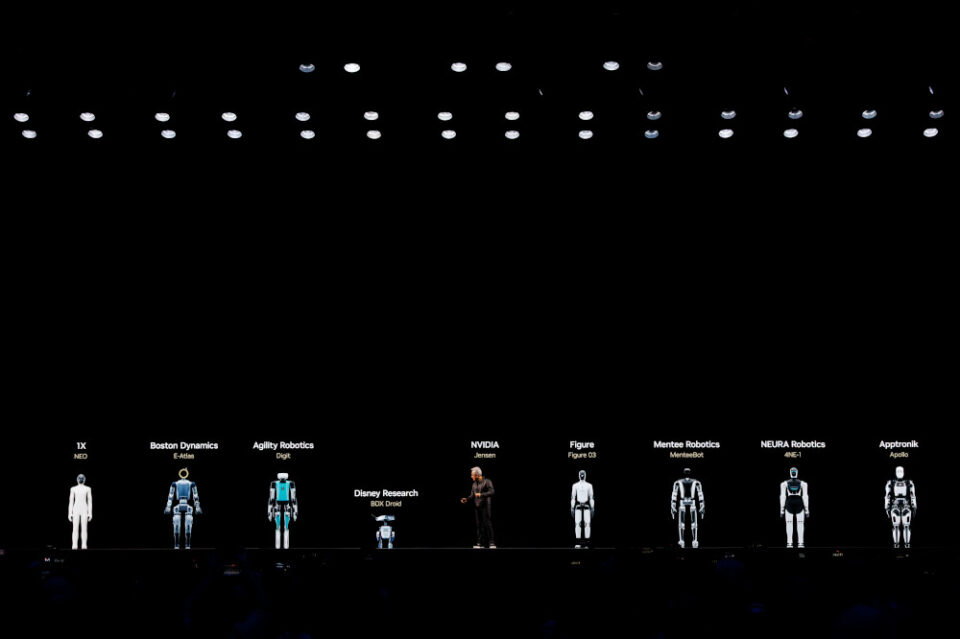

Maybe probably the most transformative leap is bodily AI — the embodiment of intelligence in robots of each kind. Three computer systems are required to construct bodily AI-embodied robots — NVIDIA DGX GB300 to coach the reasoning vision-language motion mannequin, NVIDIA RTX PRO to simulate, take a look at and validate the mannequin in a digital world constructed on Omniverse, and Jetson Thor to run the reasoning VLA at real-time velocity.

What’s anticipated subsequent is a breakthrough second for robotics inside years, with autonomous cellular robots, collaborative robots and humanoids disrupting manufacturing, logistics and healthcare. Morgan Stanley estimates there can be 1 billion humanoid robots with $5 trillion in income by 2050.

Signaling how deeply AI will embed into the bodily economic system, that’s only a sip of what’s on faucet.

AI is not only a instrument. It performs work and stands to rework each one of many world’s $100 trillion in markets. And a virtuous cycle of AI has arrived, basically altering the complete computing stack, transitioning all computer systems into new supercomputing platforms for vastly bigger alternatives.?