OpenAI and NVIDIA just announced a landmark AI infrastructure partnership, an initiative that will scale OpenAI’s compute with multi-gigawatt data centers powered by millions of NVIDIA GPUs.

To discuss what this means for the next generation of AI development and deployment, the two companies’ CEOs, and the president of OpenAI, spoke this morning with CNBC’s Jon Fortt.

“This is the biggest AI infrastructure project in history,” said NVIDIA founder and CEO Jensen Huang in the interview. “This partnership is about building an AI infrastructure that enables AI to go from the labs into the world.”

Through the partnership, OpenAI will deploy at least 10 gigawatts of NVIDIA systems for OpenAI’s next-generation AI infrastructure, including the NVIDIA Vera Rubin platform. NVIDIA also intends to invest up to $100 billion in OpenAI progressively as each gigawatt is deployed.

“There’s no partner but NVIDIA that can do this at this kind of scale, at this kind of speed,” said Sam Altman, CEO of OpenAI.

The million-GPU AI factories built through this agreement will help OpenAI meet the training and inference demands of its next frontier of AI models.

“Building this infrastructure is critical to everything we want to do,” Altman said. “This is the fuel that we need to drive improvement, drive better models, drive revenue, drive everything.”

Building Million-GPU Infrastructure to Meet AI Demand

Since the launch of OpenAI’s ChatGPT, which in 2022 became the fastest application in history to reach 100 million users, the company has grown its user base to more than 700 million weekly active users and delivered increasingly advanced capabilities, including support for agentic AI, AI reasoning, multimodal data and longer context windows.

To support its next phase of growth, the company’s AI infrastructure must scale up to meet not only the training but also the inference demands of the most advanced models for agentic and reasoning AI users worldwide.

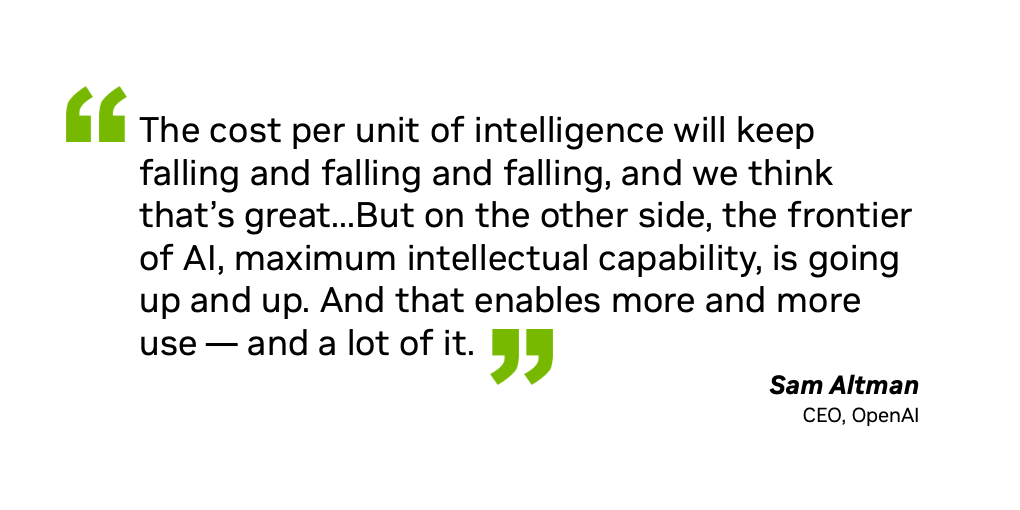

“The cost per unit of intelligence will keep falling and falling and falling, and we think that’s great,” said Altman. “But on the other side, the frontier of AI, maximum intellectual capability, is going up and up. And that enables more and more use, and a lot of it.”

Without enough computational resources, Altman explained, people would have to choose between impactful use cases, for example either researching a cancer cure or offering free education.

“No one wants to make that choice,” he said. “And so increasingly, as we see this, the answer is just much more capacity so that we can serve the massive need and opportunity.”

The first gigawatt of NVIDIA systems built with NVIDIA Vera Rubin GPUs will generate its first tokens in the second half of 2026.

The partnership expands on a long-standing collaboration between NVIDIA and OpenAI, which began with Huang hand-delivering the first NVIDIA DGX system to the company in 2016.

“This is a billion times more computational power than that initial server,” said Greg Brockman, president of OpenAI. “We’re able to actually create new breakthroughs, new models…to empower every individual and business because we’ll be able to reach the next level of scale.”

Huang emphasized that though this is the start of a massive buildout of AI infrastructure around the world, it’s just the beginning.

“We’re literally going to connect intelligence to every application, to every use case, to every device, and we’re just at the beginning,” Huang said. “This is the first 10 gigawatts, I assure you of that.”

Watch the CNBC interview.