Open-source AI is accelerating innovation across industries, and NVIDIA DGX Spark and DGX Station are built to help developers turn innovation into impact.

NVIDIA today unveiled at the CES trade show how the DGX Spark and DGX Station deskside AI supercomputers let developers harness the latest open and frontier AI models on a local deskside system, from 100-billion-parameter models on DGX Spark to 1-trillion-parameter models on DGX Station.

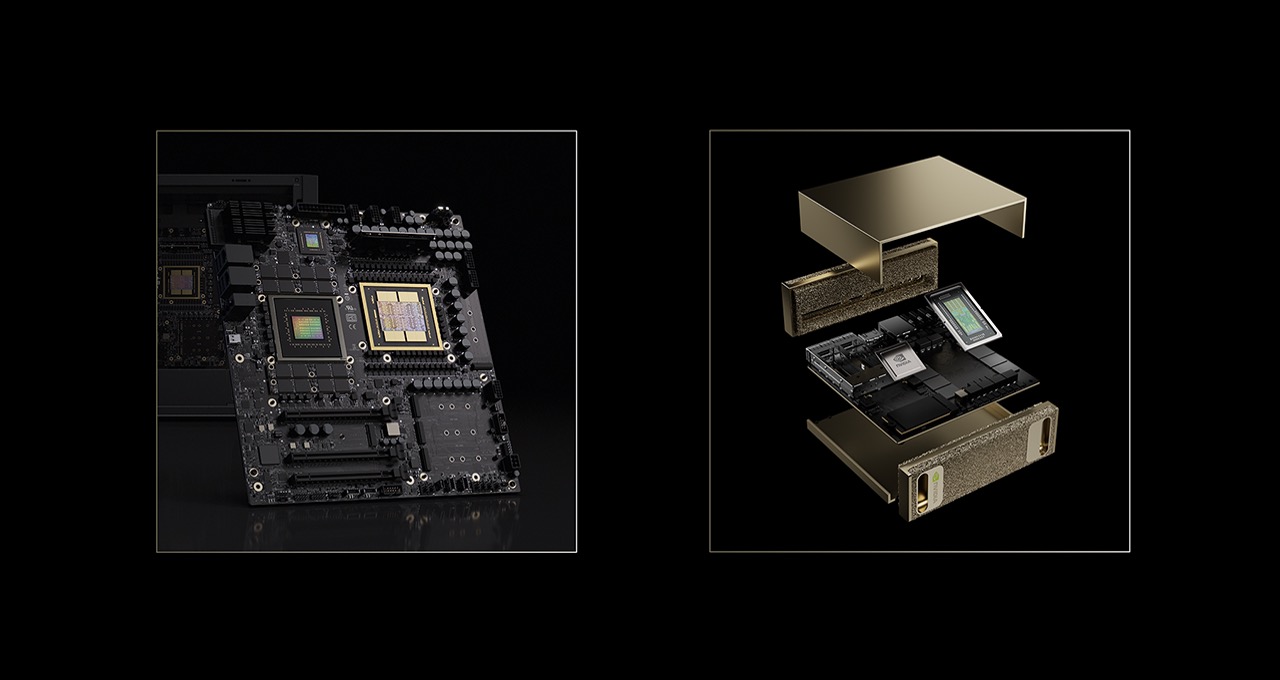

Powered by the NVIDIA Grace Blackwell architecture, with large unified memory and petaflop-level AI performance, these systems give developers new capabilities to develop locally and easily scale to the cloud.

Advancing Performance Across Open-Source AI Models

A breadth of highly optimized open models that would previously have required a data center to run can now be accelerated on the desktop with DGX Spark and DGX Station, thanks to continual advances in model optimization and collaborations with the open-source community.

Preconfigured with NVIDIA AI software and NVIDIA CUDA-X libraries, DGX Spark provides powerful, plug-and-play optimization for developers, researchers and data scientists to build, fine-tune and run AI.

Spark provides a foundation for all developers to run the latest AI models at their desks; Station enables enterprises and research labs to run more advanced, large-scale frontier AI models. Both systems support the latest frameworks and open-source models, including the recently announced NVIDIA Nemotron 3 models, right from the desktop.

The NVIDIA Blackwell architecture powering DGX Spark includes the NVFP4 data format, which lets AI models be compressed by up to 70% and boosts performance without losing intelligence.
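As a rough sanity check on the "up to 70%" figure, the sketch below estimates the average storage cost per value of a block-scaled 4-bit format. It assumes the NVFP4 layout NVIDIA has described publicly: 4-bit E2M1 values sharing one 8-bit E4M3 scale per 16-value block, with the small per-tensor scale ignored. It also assumes a 16-bit FP16/BF16 baseline, because the announcement does not say which baseline the percentage uses. The helper names are illustrative only.

```python
def effective_bits(element_bits: int, scale_bits: int, block_size: int) -> float:
    """Average storage bits per value for a block-scaled format.

    Each value stores element_bits, and each block of block_size values shares
    one scale factor of scale_bits. Per-tensor scales are ignored as negligible.
    """
    return element_bits + scale_bits / block_size


def size_reduction(baseline_bits: float, compressed_bits: float) -> float:
    """Fractional storage reduction relative to a baseline format."""
    return 1.0 - compressed_bits / baseline_bits


# ASSUMED NVFP4 layout: 4-bit E2M1 values, one 8-bit E4M3 scale per 16 values.
NVFP4_BITS = effective_bits(element_bits=4, scale_bits=8, block_size=16)

if __name__ == "__main__":
    print(f"NVFP4 effective bits per value: {NVFP4_BITS}")
    print(f"Reduction vs 16-bit baseline: {size_reduction(16, NVFP4_BITS):.1%}")
```

Under these assumptions NVFP4 averages 4.5 bits per value. That is roughly a 72% reduction compared with 16-bit weights, which is in line with the quoted figure. Actual savings depend on which tensors a given model keeps in higher precision.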

NVIDIA’s collaborations with the open-source software ecosystem, such as its work with llama.cpp, are pushing performance further, delivering a 35% average performance uplift when running state-of-the-art AI models on DGX Spark. Llama.cpp also includes a quality-of-life upgrade that speeds up LLM loading times.

DGX Station, with the GB300 Grace Blackwell Ultra superchip and 775GB of coherent memory with FP4 precision, can run models of up to 1 trillion parameters, giving frontier AI labs cutting-edge compute capability for large-scale models from the desktop. Supported models include advanced options such as Kimi-K2 Thinking, DeepSeek-V3.2, Mistral Large 3, Meta Llama 4 Maverick, Qwen3 and OpenAI gpt-oss-120b.
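The 1-trillion-parameter figure can be checked with simple arithmetic: weight memory is roughly parameter count times bits per parameter, divided by 8. The sketch below makes several assumptions. It uses decimal gigabytes and counts weights only, so it leaves out KV cache, activations and framework overhead. It also takes 4.5 bits per parameter as the effective cost of 4-bit weights with per-block scale factors, which is an assumption rather than a figure from the announcement. The function name is hypothetical.

```python
def weight_footprint_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Counts weights only; KV cache, activations and runtime overhead are ignored.
    """
    return num_params * bits_per_param / 8 / 1e9


# Coherent memory figure quoted for DGX Station.
STATION_MEMORY_GB = 775

# Candidate weight precisions; 4.5 bits is an ASSUMED effective cost of
# 4-bit values plus per-block scale factors.
PRECISIONS = [("16-bit (FP16/BF16)", 16), ("FP8", 8), ("4-bit + block scales", 4.5)]

if __name__ == "__main__":
    params = 1e12  # a 1-trillion-parameter model
    for label, bits in PRECISIONS:
        gb = weight_footprint_gb(params, bits)
        verdict = "fits" if gb <= STATION_MEMORY_GB else "does not fit"
        print(f"{label:<22} {gb:8.1f} GB  {verdict} in {STATION_MEMORY_GB} GB")
```

Only the 4-bit case fits. It needs about 562.5 GB of weights and leaves roughly 212 GB of the 775GB for KV cache and runtime state. FP8 weights (1,000 GB) and 16-bit weights (2,000 GB) would not fit. Real deployments need additional headroom, so treat this as a lower bound on the memory a model needs.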

“NVIDIA GB300 is typically deployed as a rack-scale system,” said Kaichao You, core maintainer of vLLM. “This makes it difficult for projects like vLLM to test and develop directly on the powerful GB300 superchip. DGX Station changes this dynamic. By delivering GB300 in a compact, single-system deskside form factor, DGX Station enables vLLM to test and develop GB300-specific features at a significantly lower cost. This accelerates development cycles and makes it easy for vLLM to continuously validate and optimize against GB300.”

“DGX Station brings data-center-class GPU capability directly into my room,” said Jerry Zhou, community contributor to SGLang. “It’s powerful enough to serve very large models like Qwen3-235B, test training frameworks with large model configurations and develop CUDA kernels with extremely large matrix sizes, all locally without relying on cloud racks. This dramatically shortens the iteration loop for systems and framework development.”

NVIDIA will showcase the capabilities of DGX Station live at CES, demonstrating:

- LLM pretraining that runs at a blistering 250,000 tokens per second.

- A large-scale data visualization of millions of data points grouped into category clusters. The topic modeling workflow uses machine learning techniques and algorithms accelerated by the NVIDIA cuML library.

- Visualizing large knowledge databases with high accuracy using Text to Knowledge Graph and Llama 3.3 Nemotron Super 49B.
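To put the 250,000 tokens-per-second pretraining figure in perspective, the short conversion below turns it into tokens per day and hours per billion tokens. It assumes the demo rate is sustained with no pauses for evaluation or checkpointing, which real training runs rarely achieve. The constant and helper names are illustrative.

```python
# Demo throughput quoted for DGX Station; assumed to be sustained.
TOKENS_PER_SECOND = 250_000
SECONDS_PER_DAY = 24 * 60 * 60


def tokens_in(seconds: float, rate: float = TOKENS_PER_SECOND) -> float:
    """Tokens processed in the given wall-clock time at a constant rate."""
    return seconds * rate


def hours_for(num_tokens: float, rate: float = TOKENS_PER_SECOND) -> float:
    """Wall-clock hours needed to process num_tokens at a constant rate."""
    return num_tokens / rate / 3600


if __name__ == "__main__":
    print(f"Tokens per day: {tokens_in(SECONDS_PER_DAY) / 1e9:.1f} billion")
    print(f"Hours per billion tokens: {hours_for(1e9):.2f}")
```

At that rate, a full day of training processes about 21.6 billion tokens, and a 1-billion-token pass takes a little over an hour (4,000 seconds).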

Expanding AI and Creator Workflows

DGX Spark and Station are purpose-built to support the full AI development lifecycle, from prototyping and fine-tuning to inference and data science, for a wide range of industry-specific AI applications in healthcare, robotics, retail, creative workflows and more.

For creators, the latest diffusion and video generation models, including Black Forest Labs’ FLUX.2 and FLUX.1 and Alibaba’s Qwen-Image, now support NVFP4, reducing memory footprint and accelerating performance. The new Lightricks LTX-2 video model is also available for download, including NVFP8 quantized checkpoints for NVIDIA GPUs, and delivers quality on par with the top cloud models.

Live CES demonstrations highlight how DGX Spark can offload demanding video generation workloads from creator laptops, delivering 8x acceleration compared with a top-of-the-line MacBook Pro with M4 Max and freeing local systems for uninterrupted creative work.

The open-source RTX Remix modding platform is expected to soon let 3D artists and modders use DGX Spark to create faster with generative AI. Additional CES demonstrations show how a mod team can offload all of its asset creation to DGX Spark, freeing up team members' PCs to mod without pauses and letting them view in-game changes in real time.

AI coding assistants are also transforming developer productivity. At CES, NVIDIA is demonstrating a local CUDA coding assistant powered by NVIDIA Nsight on DGX Spark, which lets developers keep source code local and secure while benefiting from AI-assisted enterprise development.

Industry Leaders Validate the Shift to Local AI

As demand grows for secure, high-performance AI at the edge, DGX Spark is gaining momentum across the industry.

Software leaders, open-source innovators and global workstation partners are adopting DGX Spark to power local inference, agentic workflows and retrieval-augmented generation without the complexity of centralized infrastructure.

Their perspectives underscore how DGX Spark is enabling faster iteration, greater control over data and IP, and new, more interactive AI experiences on the desktop.

At CES, NVIDIA is demonstrating how to use the processing power of DGX Spark with the Hugging Face Reachy Mini robot to bring AI agents into the real world.

“Open models give developers the freedom to build AI their way, and DGX Spark brings that power right to the desktop,” said Jeff Boudier, vice president of product at Hugging Face. “When you connect it to Reachy Mini, your local AI agents become embodied and gain a voice of their own. They can see you, listen to you and respond with expressive motion, turning powerful AI into something you can actually interact with.”

Hugging Face and NVIDIA have released a step-by-step guide to building an interactive AI agent using DGX Spark and Reachy Mini.

“DGX Spark brings AI inference to the edge,” said Ed Anuff, vice president of data and AI platform strategy at IBM. “With OpenRAG on Spark, users get a complete, self-contained RAG stack in a box: extraction, embedding, retrieval and inference.”

“For organizations that need full control over security, governance and intellectual property, NVIDIA DGX Spark brings petaflop-class AI performance to JetBrains customers,” said Kirill Skrygan, CEO of JetBrains. “Whether customers prefer cloud, on-premises or hybrid deployments, JetBrains AI is designed to meet them where they are.”

TRINITY, an intelligent, self-balancing, three-wheeled single-passenger vehicle designed for urban transportation, will be on display at CES. It uses DGX Spark as its AI brain, running inference for open-source, real-time vision language model workloads.

“TRINITY represents the future of micromobility, where humans, vehicles and AI agents work together seamlessly,” said will.i.am. “With NVIDIA DGX Spark as its AI brain, TRINITY delivers conversational, goal-tracking workflows that transform how people interact with mobility in connected cities. It’s brains on wheels, designed from the agent up.”

Accelerating AI Developer Adoption

DGX Spark playbooks help developers get started quickly with real-world AI projects. At CES, NVIDIA is expanding this library with six new playbooks and four major updates. They cover topics such as the latest NVIDIA Nemotron 3 Nano model, robotics training, vision language models, fine-tuning AI models using two DGX Spark systems, genomics and financial analysis.

As DGX Station becomes available later this year, more playbooks will be added to help developers get started with NVIDIA GB300 systems.

NVIDIA AI Enterprise software support is now available for DGX Spark and for GB10 systems from manufacturer partners. NVIDIA AI Enterprise includes libraries, frameworks and microservices for AI application development and model setup, as well as operators and drivers for GPU optimization, enabling fast and reliable AI engineering and deployment. Licenses are expected to be available at the end of January.

Availability

DGX Spark and manufacturer partner GB10 systems are available from Acer, Amazon, ASUS, Dell Technologies, GIGABYTE, HP Inc., Lenovo, Micro Center, MSI and PNY.

DGX Station will be available from ASUS, Boxx, Dell Technologies, GIGABYTE, HP Inc., MSI and Supermicro starting in spring 2026.

Dive deeper into DGX Spark in this technical blog.

See notice regarding software product information.