AI-powered content generation is now embedded in everyday tools like Adobe and Canva, with a slew of agencies and studios incorporating the technology into their workflows. Image models now deliver photorealistic results consistently, video models can generate long and coherent clips, and both can follow creative directions.

Creators are increasingly running these workflows locally on PCs to keep assets under direct control, remove cloud service costs and eliminate the friction of iteration, making it easier to refine outputs at the pace real creative projects demand.

Since their inception, NVIDIA RTX PCs have been the system of choice for running creative AI due to their high performance, which reduces iteration time, and the fact that users can run models on them for free, removing token anxiety.

With recent RTX optimizations and new open-weight models introduced at CES earlier this month, creatives can work faster, more efficiently and with far greater creative control.

How to Get Started

Getting started with visual generative AI can feel complex and limiting. Online AI generators are easy to use but offer limited control.

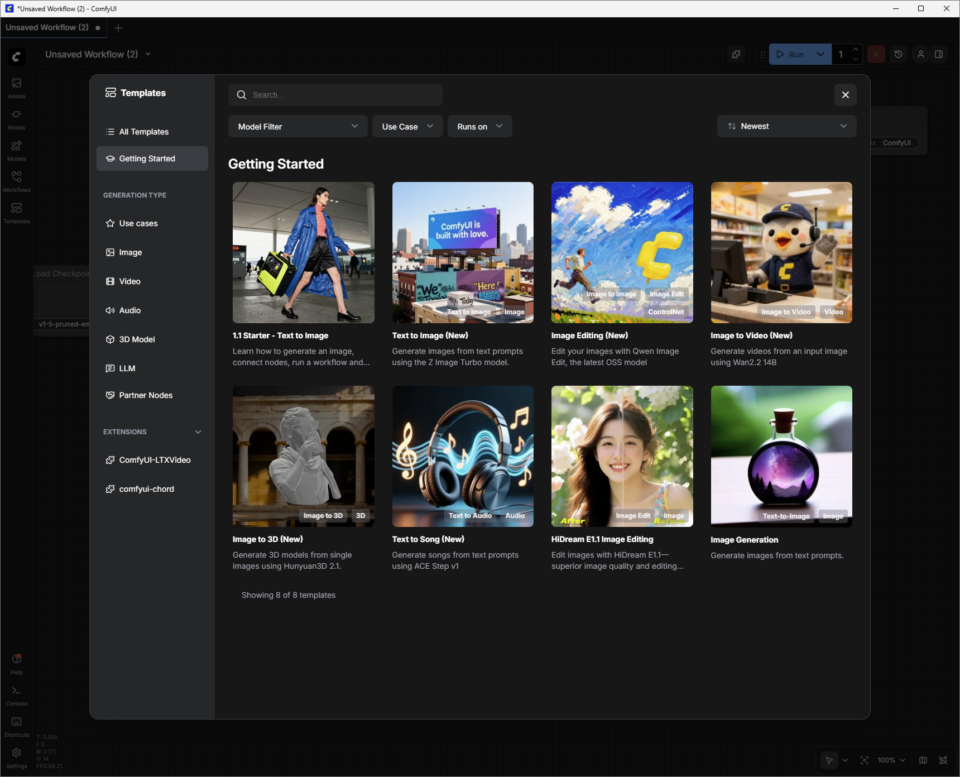

Open source community tools like ComfyUI simplify setting up advanced creative workflows and are easy to install. They also provide an easy way to download the latest and greatest models, such as FLUX.2 and LTX-2, as well as top community workflows.

Here’s how to get started with visual generative AI locally on RTX PCs using ComfyUI and popular models:

- Visit comfy.org to download and install ComfyUI for Windows.

- Launch ComfyUI.

- Create an initial image using the starter template:

  - Click on the “Templates” button, then on “Getting Started” and choose “1.1 Starter – Text to Image.”

  - Connect the model “Node” to the “Save Image Node.” The nodes work in a pipeline to generate content using AI.

  - Press the blue “Run” button and watch the green “Node” highlight as the RTX-powered PC generates its first image.

Change the prompt and run it again to go deeper into the creative world of visual generative AI.

Read more below on how to dive into additional ComfyUI templates that use more advanced image and video models.

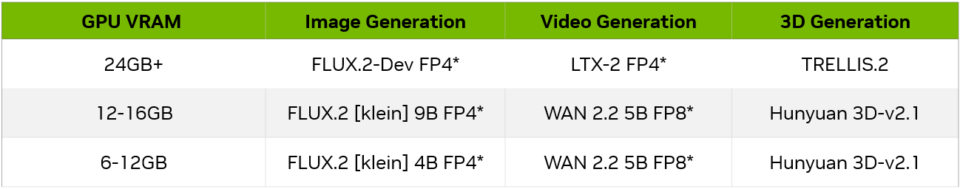

Model Sizes and GPUs

As users get more familiar with ComfyUI and the models that support it, they’ll need to consider GPU VRAM capacity and whether a model will fit within it. Here are some examples for getting started, depending on GPU VRAM:

Generating Images

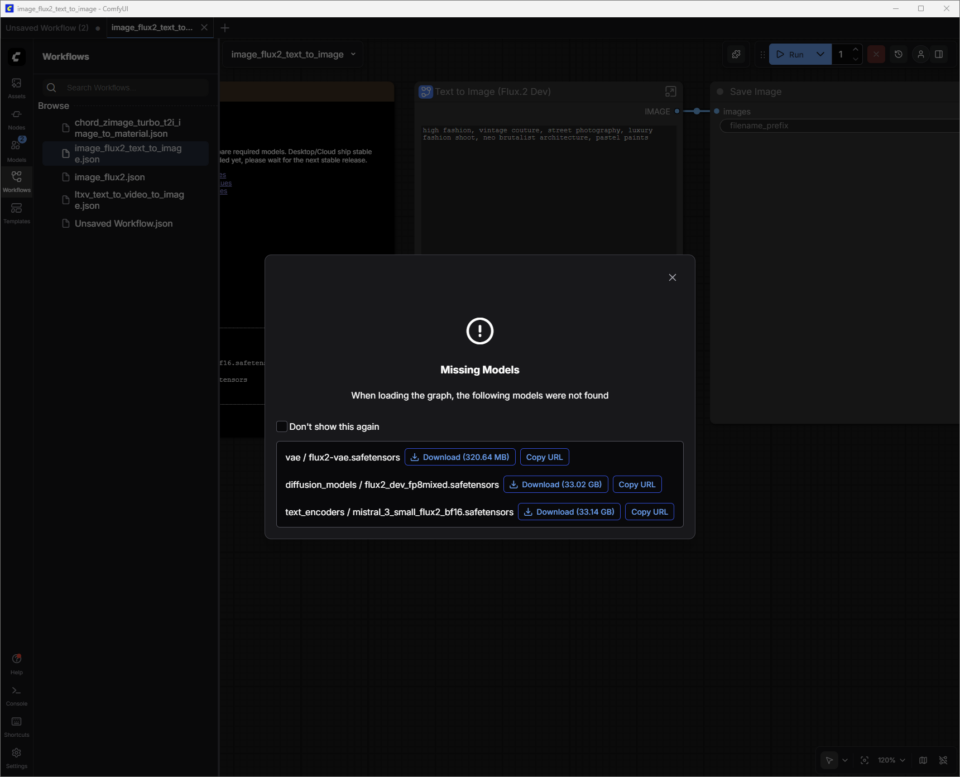

To explore how to improve image generation quality using FLUX.2-Dev:

From the ComfyUI “Templates” section, click on “All Templates” and search for “FLUX.2 Dev Text to Image.” Select it, and ComfyUI will load the collection of connected nodes, or “Workflow.”

FLUX.2-Dev has model weights that will need to be downloaded.

Model weights are the “knowledge” inside an AI model. Think of them like the synapses in a brain. When an image generation model like FLUX.2 was trained, it learned patterns from millions of images. Those patterns are stored as billions of numerical values called “weights.”

ComfyUI doesn’t come with these weights built in. Instead, it downloads them on demand from repositories like Hugging Face. These files are large (FLUX.2 can be >30GB depending on the version), which is why systems need enough storage and download time to grab them.
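As a rough back-of-the-envelope check, the size of a weight file can be approximated as the number of parameters multiplied by the bytes stored per parameter. The sketch below is only an illustration: the helper names, the 5GB headroom and the 12-billion-parameter figure are hypothetical and are not published specifications for any FLUX.2 version.

```python
import shutil

BYTES_PER_GB = 1_000_000_000  # decimal gigabytes


def estimate_weight_gb(num_params: int, bytes_per_param: float) -> float:
    """Approximate size of model weights in gigabytes."""
    return num_params * bytes_per_param / BYTES_PER_GB


def has_room(required_gb: float, available_gb: float, headroom_gb: float = 5.0) -> bool:
    """True if the weights fit while leaving some spare capacity."""
    return available_gb >= required_gb + headroom_gb


if __name__ == "__main__":
    # Hypothetical 12-billion-parameter model stored at 16 bits (2 bytes) per weight.
    size_gb = estimate_weight_gb(12_000_000_000, 2)
    free_gb = shutil.disk_usage(".").free / BYTES_PER_GB
    print(f"Estimated download: {size_gb:.1f} GB, free disk space: {free_gb:.1f} GB")
    print("Enough room" if has_room(size_gb, free_gb) else "Free up space first")
```

The same arithmetic gives a first guess at whether weights might fit in VRAM, although real memory use during generation includes more than the weights alone.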

A dialog will appear to guide users through downloading the model weights. The weight files (filename.safetensors) are automatically saved to the correct ComfyUI folder on a user’s PC.
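To confirm which weight files have actually landed on disk, a short script can list every .safetensors file under the models folder along with its size. This is a minimal sketch: the `ComfyUI/models` path and the `find_weight_files` helper are assumptions for illustration, and the real folder depends on how ComfyUI was installed.

```python
from pathlib import Path


def find_weight_files(models_dir: Path) -> list[tuple[str, float]]:
    """Return (relative path, size in GB) for every .safetensors file found."""
    if not models_dir.is_dir():
        return []
    results = []
    for path in sorted(models_dir.rglob("*.safetensors")):
        size_gb = path.stat().st_size / 1_000_000_000
        results.append((path.relative_to(models_dir).as_posix(), size_gb))
    return results


if __name__ == "__main__":
    # Assumed location; adjust to wherever ComfyUI keeps its models on this PC.
    for name, size_gb in find_weight_files(Path("ComfyUI/models")):
        print(f"{size_gb:8.2f} GB  {name}")
```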

Saving Workflows:

Now that the model weights are downloaded, the next step is to save this newly downloaded template as a “Workflow.”

Users can click on the top-left hamburger menu (three lines) and choose “Save.” The workflow is now saved in the user’s list of “Workflows” (press W to show or hide the window). Close the tab to exit the workflow without losing any work.

If the download dialog was accidentally closed before the model weights finished downloading:

- Press W to quickly open the “Workflows” window.

- Select the Workflow and ComfyUI will load it. This will also prompt for any missing model weights to download.

- ComfyUI is now ready to generate an image using FLUX.2-Dev.

Prompt Tips for FLUX.2-Dev:

- Start with clear, concrete descriptions of the subject, setting, style and mood. For example: “Cinematic closeup of a vintage race car in the rain, neon reflections on wet asphalt, high contrast, 35mm photography.” Short-to-medium length prompts (a single, focused sentence or two) are usually easier to control than long, storylike prompts, especially when getting started.

- Add constraints to guide consistency and quality. Specify things like:

  - Framing (“wide shot” or “portrait”)

  - Detail level (“high detail, sharp focus”)

  - Realism (“photorealistic” or “stylized illustration”)

- If results are too busy, remove adjectives instead of adding more.

- Avoid negative prompting and stick to prompting what’s desired.
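The tips above can be sketched as a small helper that assembles a prompt from a subject, setting, style, mood and optional constraints. `build_image_prompt` is a hypothetical convenience function, not part of ComfyUI or FLUX.2; it only joins text, so the result still needs to be pasted into the workflow’s prompt field.

```python
def build_image_prompt(subject, setting="", style="", mood="", constraints=()):
    """Join the non-empty parts into one comma-separated prompt."""
    parts = [subject, setting, style, mood, *constraints]
    return ", ".join(p.strip() for p in parts if p and p.strip())


prompt = build_image_prompt(
    subject="Cinematic closeup of a vintage race car in the rain",
    setting="neon reflections on wet asphalt",
    style="35mm photography",
    mood="high contrast",
    constraints=("sharp focus", "photorealistic"),
)
print(prompt)
```

Keeping each element in its own argument makes it easy to drop an adjective or constraint when results look too busy.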

Learn more about FLUX.2 prompting in this guide from Black Forest Labs.

Save Locations on Disk:

Once done refining the image, right-click on “Save Image Node” to open the image in a browser, or save it in a new location.

ComfyUI’s default output folders are typically the following, based on the application type and OS:

- Windows (Standalone/Portable Version): The folder is typically found in C:\ComfyUI\output or a similar path within where the program was unzipped.

- Windows (Desktop Application): The path is usually located within the AppData directory, like: C:\Users\%username%\AppData\Local\Programs\@comfyorgcomfyui-electron\resources\ComfyUI\output

- Linux: The installation location defaults to ~/.config/ComfyUI.
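When an output folder fills up, finding the most recent render by hand gets tedious. The sketch below picks the newest image in a folder by modification time. The `latest_image` helper and the list of file extensions are assumptions for illustration, and the example path should be swapped for whichever output folder applies.

```python
from pathlib import Path
from typing import Optional

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def latest_image(output_dir: Path) -> Optional[Path]:
    """Return the most recently modified image in output_dir, or None."""
    if not output_dir.is_dir():
        return None
    images = [
        p for p in output_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    ]
    return max(images, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    # Example path for a portable Windows install; adjust as needed.
    print(latest_image(Path(r"C:\ComfyUI\output")))
```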

Prompting Videos

Explore how to improve video generation quality, using the new LTX-2 model as an example:

Lightricks’ LTX-2 is an advanced audio-video model designed for controllable, storyboard-style video generation in ComfyUI. Once the LTX-2 Image to Video Template and model weights are downloaded, start by treating the prompt like a short shot description, rather than a full movie script.

Unlike the first two Templates, LTX-2 Image to Video combines an image and a text prompt to generate video.

Users can take one of the images generated in FLUX.2-Dev and add a text prompt to give it life.

Prompt Tips for LTX-2:

For best results in ComfyUI, write a single flowing paragraph in the present tense or use a simple, script-style format with scene headings (sluglines), action, character names and dialogue. Aim for four to six descriptive sentences that cover all the key aspects:

- Establish the shot and scene (wide/medium/closeup, lighting, color, textures, atmosphere).

- Describe the action as a clear sequence, define characters with visible traits and body language, and specify camera moves.

- Finally, add audio, such as ambient sound, music and dialogue, using quotation marks.

- Match the level of detail to the shot scale. For example, closeups need more precise character and texture detail than wide shots. Be clear on how the camera relates to the subject, not just where it moves.

Additional details to consider adding to prompts:

- Camera movement language: Specify directions like “slow dolly in,” “handheld tracking,” “over-the-shoulder shot,” “pans across,” “tilts upward,” “pushes in,” “pulls back” or “static frame.”

- Shot types: Specify wide, medium or close-ups with thoughtful lighting, shallow depth of field and natural motion.

- Pacing: Direct for slow motion, time-lapses, lingering shots, continuous shots, freeze frames or seamless transitions that shape rhythm and tone.

- Atmosphere: Add details like fog, mist, rain, golden hour light, reflections and rich surface textures that ground the scene.

- Style: Early in the prompt, specify styles like painterly, film noir, analog film, stop-motion, pixelated edges, fashion editorial or surreal.

- Lighting: Direct backlighting, specific color palettes, soft rim light, lens flares or other lighting details using specific language.

- Emotions: Focus on prompting for single-subject performances with clear facial expressions and small gestures.

- Voice and audio: Prompt characters to speak or sing in different languages, supported by clear ambient sound descriptions.
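One way to practice the structure described above is to write the shot, action, camera and audio separately, then join them into a single paragraph and check that it lands in the four-to-six sentence range. The helpers below are hypothetical and only manipulate text; the sentence counter is a rough heuristic, and the example wording is illustrative rather than a tested LTX-2 prompt.

```python
import re


def build_video_prompt(shot: str, action: str, camera: str, audio: str) -> str:
    """Combine shot, action, camera and audio into one flowing paragraph."""
    sentences = []
    for part in (shot, action, camera, audio):
        text = part.strip()
        if text:
            # Make sure each part ends as a sentence before joining.
            sentences.append(text if text[-1] in ".!?" else text + ".")
    return " ".join(sentences)


def count_sentences(paragraph: str) -> int:
    """Rough count: split where ., ! or ? is followed by whitespace."""
    return len([s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s])


paragraph = build_video_prompt(
    shot="Medium closeup of a vintage race car idling at night, wet asphalt glowing under neon signs",
    action="The driver grips the wheel, exhales and flips down the visor. The engine revs and the car lurches forward",
    camera="The camera starts static, then slowly dollies in toward the driver's window",
    audio='Rain patters on the hood as the driver mutters, "Not today," over a low synth hum',
)
print(paragraph)
print("Sentences:", count_sentences(paragraph))
```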

Optimizing VRAM Usage and Image Quality

As a frontier model, LTX-2 uses significant amounts of video memory (VRAM) to deliver quality results. Memory use goes up as resolution, frame rates, length or steps increase.

ComfyUI and NVIDIA have collaborated to optimize a weight streaming feature that allows users to offload parts of the workflow to system memory if their GPU runs out of VRAM, but this comes at a cost in performance.

Depending on the GPU and use case, users may want to constrain these factors to ensure reasonable generation times.
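To compare candidate settings before committing to a long render, a crude proxy is to multiply pixels per frame by frame count and step count, then express the result relative to a baseline. This is a loudly simplified assumption: real memory use and generation time do not scale perfectly linearly, and the baseline values below are hypothetical, not recommended LTX-2 settings.

```python
def relative_workload(width, height, fps, seconds, steps,
                      base=(1280, 720, 24, 5, 20)):
    """Ratio of (pixels x frames x steps) against a baseline setting.

    Assumes cost grows linearly with each factor, which is only a
    rough approximation of real VRAM use and generation time.
    """
    def units(w, h, f, s, n):
        return w * h * f * s * n

    return units(width, height, fps, seconds, steps) / units(*base)


# Doubling both dimensions quadruples the pixel count per frame.
print(relative_workload(2560, 1440, 24, 5, 20))
# Doubling the clip length doubles the number of frames.
print(relative_workload(1280, 720, 24, 10, 20))
```

A ratio well above 1 suggests trimming resolution, length or steps first and scaling back up once a shot works.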

LTX-2 is an incredibly advanced model, but as with any model, tweaking the settings has a big impact on quality.

Learn more about optimizing LTX-2 usage with RTX GPUs in the Quick Start Guide for LTX-2 In ComfyUI.

Building a Custom Workflow With FLUX.2-Dev and LTX-2

Instead of generating an image in the FLUX.2-Dev Workflow, finding it on disk and adding it as an image prompt to the LTX-2 Image to Video Workflow, users can combine the two models into a single new workflow:

- Open the saved FLUX.2-Dev Text to Image Workflow.

- Ctrl+left mouse click the FLUX.2-Dev Text to Image node.

- In the LTX-2 Image to Video Workflow, paste the node using Ctrl+V.

- Simply hover over the FLUX.2-Dev Text to Image node IMAGE dot, left click and drag to the Resize Image/Mask Input dot. A blue connector will appear.

Save the workflow with a new name, then use text prompts for both image and video in one workflow.
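Conceptually, dragging a connector between two dots records that one node’s output feeds another node’s input. The sketch below models that idea with plain dictionaries. The node class names are hypothetical placeholders rather than real ComfyUI node types, and the `[source node id, output index]` link shape is modeled on the API-format JSON that ComfyUI can export, so treat it as an assumption rather than a specification.

```python
import copy


def connect(graph, src_id, src_output, dst_id, dst_input):
    """Return a copy of graph with src's output wired into dst's input."""
    if src_id not in graph or dst_id not in graph:
        raise KeyError("both nodes must exist in the graph")
    linked = copy.deepcopy(graph)
    # A link is stored on the receiving node as [source node id, output index].
    linked[dst_id]["inputs"][dst_input] = [src_id, src_output]
    return linked


# Hypothetical placeholder nodes; these are not real ComfyUI class names.
graph = {
    "1": {"class_type": "TextToImagePlaceholder", "inputs": {"text": "a vintage race car"}},
    "2": {"class_type": "ResizeImagePlaceholder", "inputs": {}},
    "3": {"class_type": "ImageToVideoPlaceholder", "inputs": {"text": "the car pulls away"}},
}

# Image generator -> resize step -> video generator.
combined = connect(graph, "1", 0, "2", "image")
combined = connect(combined, "2", 0, "3", "image")
print(combined["2"]["inputs"])
print(combined["3"]["inputs"])
```

Because `connect` returns a copy, the original graph stays untouched, much like saving the combined workflow under a new name.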

Advanced 3D Generation

Beyond generating images with FLUX.2 and videos with LTX-2, the next step is adding 3D guidance. The NVIDIA Blueprint for 3D-guided generative AI shows how to use 3D scenes and assets to drive more controllable, production-style image and video pipelines on RTX PCs, with ready-made workflows users can inspect, tweak and extend.

Creators can show off their work, connect with other users and find help on the Stable Diffusion subreddit and ComfyUI Discord.

#ICYMI: The Latest Developments in NVIDIA RTX AI PCs

NVIDIA @ CES 2026

CES announcements included 4K AI video generation acceleration on PCs with LTX-2 and ComfyUI upgrades. Plus, major RTX accelerations across ComfyUI, LTX-2, Llama.cpp, Ollama, Hyperlink and more unlock video, image and text generation use cases on AI PCs.

Black Forest Labs FLUX.2 Variants

FLUX.2 (klein) is a set of compact, ultrafast models that support both image generation and editing, delivering state-of-the-art image quality. The models are accelerated by NVFP4 and NVFP8, boosting speed by up to 2.5x and enabling them to run performantly across a wide range of RTX GPUs.

Project G-Assist Update

With a new “Reasoning Mode” enabled by default, Project G-Assist gains an accuracy and intelligence boost, as well as the ability to action multiple commands at once. G-Assist can now control settings on G-SYNC monitors, CORSAIR peripherals and CORSAIR PC components through iCUE, covering lighting, profiles, performance and cooling.

Support is also coming soon to Elgato Stream Decks, bringing G-Assist closer to a unified AI interface for tuning and controlling nearly any system. For G-Assist plug-in devs, a new Cursor-based plug-in builder accelerates development using Cursor’s agentic coding environment.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter.

Follow NVIDIA Workstation on LinkedIn and X.

See notice regarding software product information.