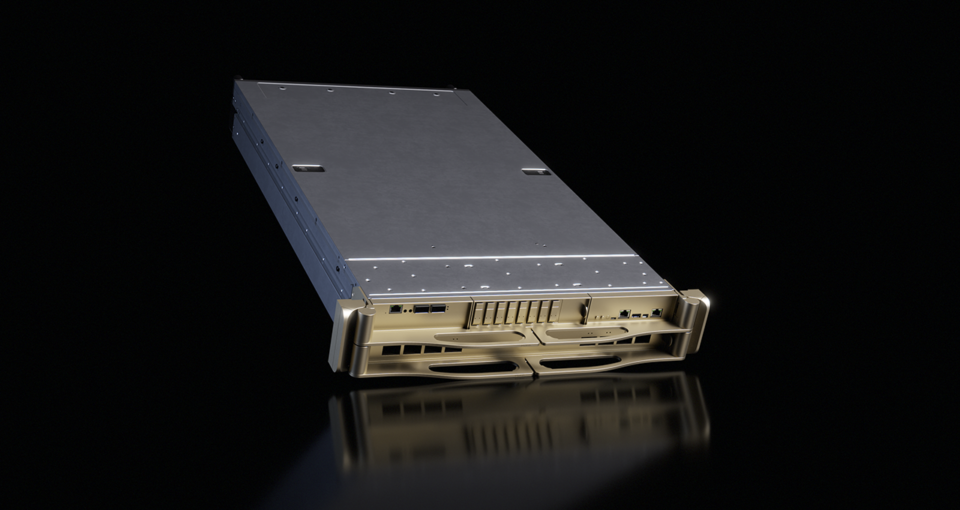

NVIDIA DGX SuperPOD is paving the way for large-scale system deployments built on the NVIDIA Rubin platform, the next leap forward in AI computing.

At the CES trade show in Las Vegas, NVIDIA today introduced the Rubin platform, comprising six new chips designed to deliver one incredible AI supercomputer and engineered to accelerate agentic AI, mixture-of-experts (MoE) models and long-context reasoning.

The Rubin platform unites six chips (the NVIDIA Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 SuperNIC, BlueField-4 DPU and Spectrum-6 Ethernet Switch) through an advanced codesign approach that accelerates training and reduces the cost of inference token generation.

DGX SuperPOD remains the foundational design for deploying Rubin-based systems across enterprise and research environments.

The NVIDIA DGX platform addresses the entire technology stack, from NVIDIA computing to networking to software, as a single, cohesive system, removing the burden of infrastructure integration and allowing teams to focus on AI innovation and business outcomes.

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” said Jensen Huang, founder and CEO of NVIDIA.

New Platform for the AI Industrial Revolution

The Rubin platform used in the new DGX systems introduces five major technology advancements designed to drive a step-function increase in intelligence and efficiency:

- Sixth-Generation NVIDIA NVLink: 3.6TB/s per GPU and 260TB/s per Vera Rubin NVL72 rack for massive MoE and long-context workloads.

- NVIDIA Vera CPU: 88 NVIDIA custom Olympus cores, full Armv9.2 compatibility and ultrafast NVLink-C2C connectivity for industry-leading efficient AI factory compute.

- NVIDIA Rubin GPU: 50 petaflops of NVFP4 compute for AI inference, featuring a third-generation Transformer Engine with hardware-accelerated compression.

- Third-Generation NVIDIA Confidential Computing: Vera Rubin NVL72 is the first rack-scale platform delivering NVIDIA Confidential Computing, which maintains data security across CPU, GPU and NVLink domains.

- Second-Generation RAS Engine: Spanning GPU, CPU and NVLink, the NVIDIA Rubin platform delivers real-time health monitoring, fault tolerance and proactive maintenance, with modular cable-free trays enabling 3x faster servicing.

Together, these innovations deliver up to a 10x reduction in inference token cost relative to the previous generation, a critical milestone as AI models grow in size, context and reasoning depth.

DGX SuperPOD: The Blueprint for NVIDIA Rubin Scale-Out

Rubin-based DGX SuperPOD deployments will integrate:

- NVIDIA DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems

- NVIDIA BlueField-4 DPUs for secure, software-defined infrastructure

- NVIDIA Inference Context Memory Storage Platform for next-generation inference

- NVIDIA ConnectX-9 SuperNICs

- NVIDIA Quantum-X800 InfiniBand and NVIDIA Spectrum-X Ethernet

- NVIDIA Mission Control for automated AI infrastructure orchestration and operations

NVIDIA DGX SuperPOD with DGX Vera Rubin NVL72 unifies eight DGX Vera Rubin NVL72 systems, featuring 576 Rubin GPUs, to deliver 28.8 exaflops of FP4 performance and 600TB of fast memory. Each DGX Vera Rubin NVL72 system, combining 36 Vera CPUs, 72 Rubin GPUs and 18 BlueField-4 DPUs, enables a unified memory and compute space across the rack. With 260TB/s of aggregate NVLink throughput, it eliminates the need for model partitioning and allows the entire rack to operate as a single, coherent AI engine.

NVIDIA DGX SuperPOD with DGX Rubin NVL8 systems delivers 64 DGX Rubin NVL8 systems featuring 512 Rubin GPUs. NVIDIA DGX Rubin NVL8 systems bring Rubin performance into a liquid-cooled form factor with x86 CPUs to give organizations an efficient on-ramp to the Rubin era for any AI project in the develop-to-deploy pipeline. Powered by eight NVIDIA Rubin GPUs and sixth-generation NVLink, each DGX Rubin NVL8 delivers 5.5x NVFP4 FLOPS compared with NVIDIA Blackwell systems.
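The aggregate figures quoted for the two SuperPOD configurations can be cross-checked from the per-GPU numbers given earlier in the article. The following is a minimal sketch of that arithmetic only. The variable names are illustrative, and it assumes the 28.8-exaflop FP4 figure is simply the per-GPU 50-petaflop NVFP4 figure multiplied by the GPU count, which the article does not state explicitly.

```python
# Per-unit figures quoted in the article.
NVFP4_PFLOPS_PER_GPU = 50   # Rubin GPU NVFP4 inference compute, in petaflops
NVLINK_TBPS_PER_GPU = 3.6   # sixth-generation NVLink bandwidth per GPU, in TB/s

# DGX SuperPOD with DGX Vera Rubin NVL72: eight racks of 72 GPUs each.
nvl72_gpus = 8 * 72
# Assumption: aggregate FP4 compute scales linearly with GPU count.
nvl72_exaflops = nvl72_gpus * NVFP4_PFLOPS_PER_GPU / 1000
# Aggregate NVLink throughput inside a single NVL72 rack.
rack_nvlink_tbps = round(72 * NVLINK_TBPS_PER_GPU, 1)

# DGX SuperPOD with DGX Rubin NVL8: 64 systems of eight GPUs each.
nvl8_gpus = 64 * 8

print(f"NVL72 SuperPOD GPUs:     {nvl72_gpus}")
print(f"NVL72 SuperPOD exaflops: {nvl72_exaflops}")
print(f"NVL72 rack NVLink TB/s:  {rack_nvlink_tbps}")
print(f"NVL8 SuperPOD GPUs:      {nvl8_gpus}")
```

Under these assumptions the GPU counts match the quoted 576 and 512, the compute total matches 28.8 exaflops, and the per-rack NVLink product comes to 259.2TB/s, which the article appears to round to 260TB/s.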

Next-Generation Networking for AI Factories

The Rubin platform redefines the data center as a high-performance AI factory with revolutionary networking, featuring NVIDIA Spectrum-6 Ethernet switches, NVIDIA Quantum-X800 InfiniBand switches, BlueField-4 DPUs and ConnectX-9 SuperNICs, designed to sustain the world’s most massive AI workloads. By integrating these innovations into the NVIDIA DGX SuperPOD, the Rubin platform eliminates the traditional bottlenecks of scale, congestion and reliability.

Optimized Connectivity for Massive-Scale Clusters

The next-generation 800Gb/s end-to-end networking suite provides two purpose-built paths for AI infrastructure, ensuring peak efficiency whether using InfiniBand or Ethernet:

- NVIDIA Quantum-X800 InfiniBand: Delivers the industry’s lowest latency and highest performance for dedicated AI clusters. It uses Scalable Hierarchical Aggregation and Reduction Protocol (SHARP v4) and adaptive routing to offload collective operations to the network.

- NVIDIA Spectrum-X Ethernet: Built on the Spectrum-6 Ethernet switch and ConnectX-9 SuperNIC, this platform brings predictable, high-performance scale-out and scale-across connectivity to AI factories using standard Ethernet protocols, optimized specifically for the “east-west” traffic patterns of AI workloads.

Engineering the Gigawatt AI Factory

These innovations represent an extreme codesign with the Rubin platform. By mastering congestion control and performance isolation, NVIDIA is paving the way for the next wave of gigawatt AI factories. This holistic approach ensures that as AI models grow in complexity, the networking fabric of the AI factory remains a catalyst for speed rather than a constraint.

NVIDIA Software program Advances AI Manufacturing facility Operations and Deployments

NVIDIA Mission Management — AI information middle operation and orchestration software program for NVIDIA Blackwell-based DGX techniques — shall be out there for Rubin-based NVIDIA DGX techniques to allow enterprises to automate the administration and operations of their infrastructure.

NVIDIA Mission Management accelerates each facet of infrastructure operations, from configuring deployments to integrating with amenities to managing clusters and workloads.

With clever, built-in software program, enterprises acquire improved management over cooling and energy occasions for NVIDIA Rubin, in addition to infrastructure resiliency. NVIDIA Mission Management allows quicker response with fast leak detection, unlocks entry to NVIDIA’s newest effectivity improvements and maximizes AI manufacturing unit productiveness with autonomous restoration.

NVIDIA DGX techniques additionally assist the NVIDIA AI Enterprise software program platform, together with NVIDIA NIM microservices, corresponding to for the NVIDIA Nemotron-3 household of open fashions, information and libraries.

DGX SuperPOD: The Road Ahead for Industrial AI

DGX SuperPOD has long served as the blueprint for large-scale AI infrastructure. The arrival of the Rubin platform will become the launchpad for a new generation of AI factories: systems designed to reason across thousands of steps and deliver intelligence at dramatically lower cost, helping organizations build the next wave of frontier models, multimodal systems and agentic AI applications.

NVIDIA DGX SuperPOD with DGX Vera Rubin NVL72 or DGX Rubin NVL8 systems will be available in the second half of this year.

See notice regarding software product information.