In collaboration with OpenAI, NVIDIA has optimized the company’s new open-source gpt-oss models for NVIDIA GPUs, delivering smart, fast inference from the cloud to the PC. These new reasoning models enable agentic AI applications such as web search, in-depth research and many more.

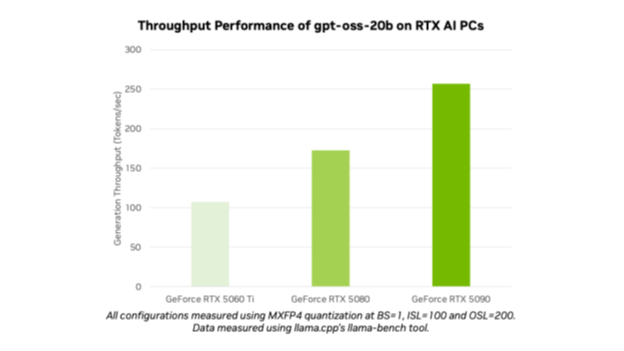

With the launch of gpt-oss-20b and gpt-oss-120b, OpenAI has opened cutting-edge models to millions of users. AI enthusiasts and developers can use the optimized models on NVIDIA RTX AI PCs and workstations through popular tools and frameworks like Ollama, llama.cpp and Microsoft AI Foundry Local, and can expect performance of up to 256 tokens per second on the NVIDIA GeForce RTX 5090 GPU.
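Throughput figures such as 256 tokens per second depend on the GPU, model and settings, so it can help to measure your own. The following is a minimal sketch that assumes you already have an iterator yielding generated tokens as they stream in. `fake_stream` is a hypothetical stand-in for a real model's streaming output.

```python
import time
from typing import Iterable, Tuple


def measure_tokens_per_second(tokens: Iterable[str]) -> Tuple[int, float]:
    """Consume a token stream and return (token_count, tokens_per_second)."""
    start = time.perf_counter()
    count = 0
    for _ in tokens:
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time for extremely short streams.
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, rate


def fake_stream(n: int, delay_s: float):
    """Hypothetical stand-in for a model's streaming output."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    n, rate = measure_tokens_per_second(fake_stream(64, 1 / 256))
    print(f"{n} tokens at ~{rate:.0f} tokens/s")
```

For scale, 256 tokens per second works out to roughly 3.9 ms per generated token (1000 / 256).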

“OpenAI showed the world what could be built on NVIDIA AI, and now they’re advancing innovation in open-source software,” said Jensen Huang, founder and CEO of NVIDIA. “The gpt-oss models let developers everywhere build on that state-of-the-art open-source foundation, strengthening U.S. technology leadership in AI, all on the world’s largest AI compute infrastructure.”

The models’ launch highlights NVIDIA’s AI leadership from training to inference and from cloud to AI PC.

Open for All

Both gpt-oss-20b and gpt-oss-120b are flexible, open-weight reasoning models built on the popular mixture-of-experts architecture, with chain-of-thought capabilities and adjustable reasoning effort levels. The models are designed to support features such as instruction following and tool use, and were trained on NVIDIA H100 GPUs.
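In a mixture-of-experts layer, a learned router sends each token to only a few "expert" sub-networks, so only a fraction of the parameters are active for any given token. The sketch below shows generic top-k softmax routing in plain Python. It is not gpt-oss's actual router. The toy experts, the router scores and `k=2` are illustrative assumptions. In a real model, the router logits come from a learned projection of the token's hidden state.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: List[float], router_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> List[float]:
    """Route x to the top-k experts and mix their outputs by gate weight."""
    # Pick the k experts with the highest router scores.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i],
                 reverse=True)[:k]
    # Softmax over the selected experts only, so the gates sum to 1.
    gates = softmax([router_logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, top):
        y = experts[idx](x)  # only the k chosen experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy experts: each one just scales its input.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
```

For example, with router logits `[0.0, 0.0, 5.0, 5.0]` and `k=2`, only the third and fourth experts run, each with a gate weight of 0.5, so an input of `[1.0, 1.0]` becomes `[3.5, 3.5]`.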

These models support context lengths of up to 131,072 tokens, among the longest available for local inference. This means the models can reason over large amounts of context, making them ideal for tasks such as web search, coding assistance, document comprehension and in-depth research.
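When you feed a long document to a local model, you need to check that the prompt and the reply both fit in the context window. Below is a rough sketch of that check. The "about 4 characters per token" ratio is only a rule-of-thumb assumption for English text, and the 4,096-token reply budget is an arbitrary example value. For exact counts, use the model's own tokenizer.

```python
import math

# 131,072 tokens = 2**17, often written as "128K".
MAX_CONTEXT_TOKENS = 131_072


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    # ASSUMPTION: ~4 characters per token is a rough English-text heuristic,
    # not the gpt-oss tokenizer's real behavior.
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(prompt: str, reserved_output_tokens: int = 4096,
                    max_context: int = MAX_CONTEXT_TOKENS) -> bool:
    """True if the estimated prompt size plus room for the reply fits."""
    return estimate_tokens(prompt) + reserved_output_tokens <= max_context
```

Under this heuristic, a 400,000-character document (about 100,000 tokens) fits with room for a reply. A 600,000-character one (about 150,000 tokens) would need to be split or summarized first.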

The OpenAI open models are the first MXFP4 models supported on NVIDIA RTX. MXFP4 preserves high model quality while delivering fast, efficient performance and requiring fewer resources than other precision types.
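MXFP4 saves memory by storing 4-bit floating-point elements (FP4 E2M1) in blocks of 32 that share a single 8-bit power-of-two scale. The sketch below follows the block-quantization recipe in the OCP Microscaling (MX) specification as we understand it. It simplifies two things: ties round toward the smaller magnitude rather than to even, and the shared exponent is not clamped to the 8-bit scale's range. It is an illustration, not the kernel code used by any runtime.

```python
import math

# Non-negative magnitudes representable by FP4 E2M1.
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 32   # elements that share one scale
FP4_EMAX = 2      # largest E2M1 exponent (6.0 = 1.5 * 2**2)
# 4 bits per element plus one 8-bit scale per block = 4.25 bits per element.
BITS_PER_ELEMENT = (BLOCK_SIZE * 4 + 8) / BLOCK_SIZE


def quantize_fp4(x: float) -> float:
    """Round x to the nearest signed E2M1 value, saturating at +/-6.
    Simplification: ties go to the smaller magnitude."""
    mag = min(abs(x), 6.0)
    nearest = min(FP4_E2M1_VALUES, key=lambda v: abs(v - mag))
    return math.copysign(nearest, x)


def mxfp4_quantize_block(block):
    """Return (shared_exponent, fp4_elements) for one block of floats."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Shared scale is 2**(floor(log2(amax)) - emax of the element format).
    shared_exp = math.floor(math.log2(amax)) - FP4_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [quantize_fp4(v / scale) for v in block]


def mxfp4_dequantize_block(shared_exp, elems):
    scale = 2.0 ** shared_exp
    return [e * scale for e in elems]
```

For example, quantizing `[0.1 * i for i in range(32)]` gives a shared exponent of -1 (a scale of 0.5). The largest value, 3.1, saturates to 6 × 0.5 = 3.0. At 4.25 bits per element, storage is roughly a quarter of 16-bit formats.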

Run the OpenAI Models on NVIDIA RTX With Ollama

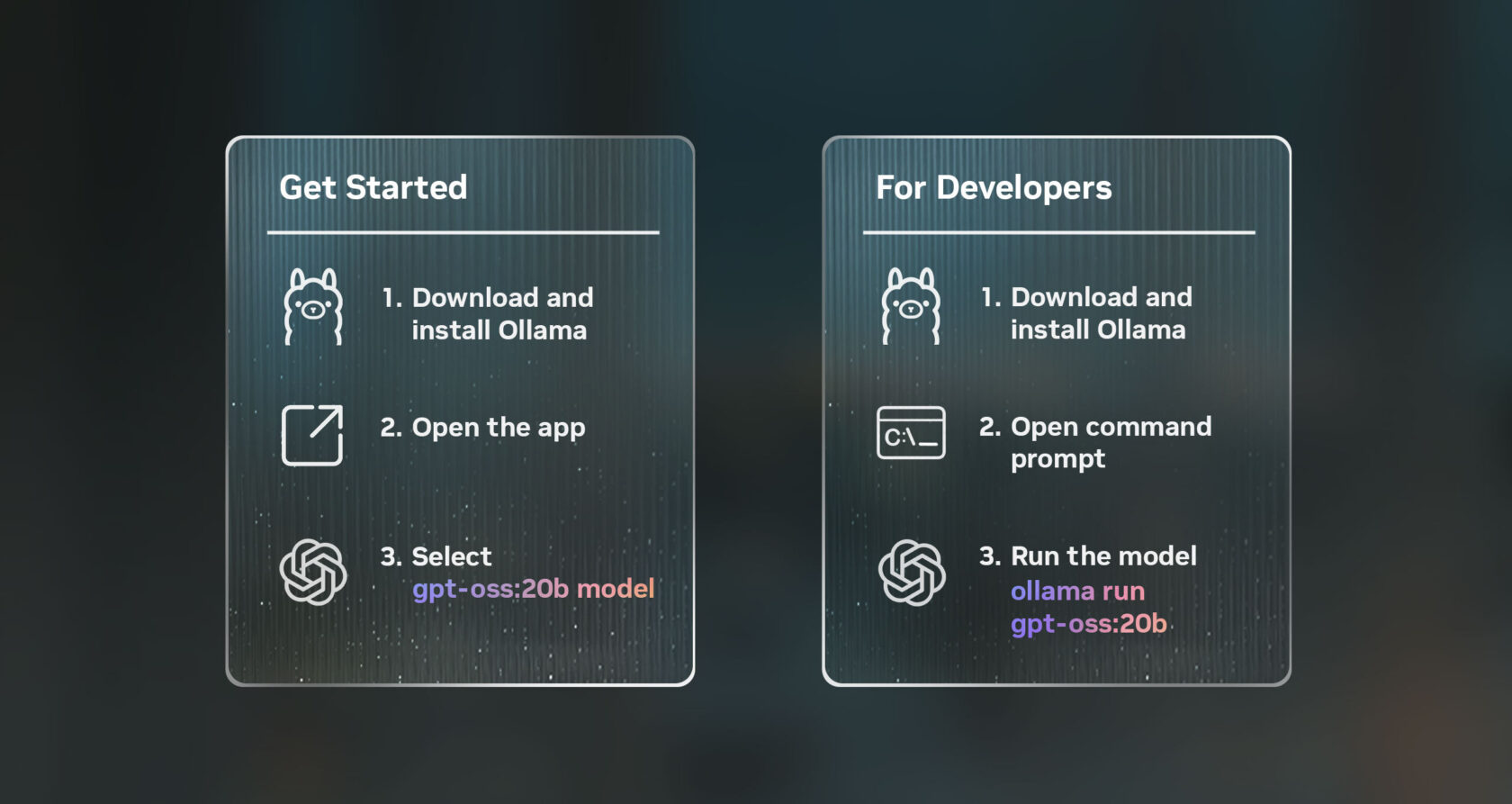

The easiest way to test these models on RTX AI PCs with GPUs that have at least 24GB of VRAM is the new Ollama app. Ollama is popular with AI enthusiasts and developers for its ease of integration, and the new user interface (UI) includes out-of-the-box support for OpenAI’s open-weight models. Ollama is fully optimized for RTX, making it ideal for users looking to experience the power of personal AI on their PC or workstation.

Once installed, Ollama enables quick, easy chatting with the models. Simply select the model from the dropdown menu and send a message. Because Ollama is optimized for RTX, no additional configuration or commands are required to ensure top performance on supported GPUs.

Ollama’s new app includes other new features, such as easy support for PDF or text files within chats, multimodal support on applicable models so users can include images in their prompts, and easily customizable context lengths when working with large documents or chats.

Developers can also use Ollama through its command line interface or the app’s software development kit (SDK) to power their applications and workflows.
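Besides the CLI and SDK, a running Ollama instance serves a local REST API. The sketch below calls that API using only the Python standard library. It makes these assumptions: Ollama is listening on its default address, `http://localhost:11434`, and the model was pulled under the tag `gpt-oss:20b` (for example with `ollama pull gpt-oss:20b`). The helper names are hypothetical. `num_ctx` is the Ollama option that sets a request's context length, and the 8,192 default here is arbitrary.

```python
import json
import urllib.request

# Ollama's default local chat endpoint.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt: str, model: str = "gpt-oss:20b",
                       num_ctx: int = 8192) -> dict:
    """Build a non-streaming /api/chat payload (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
        # num_ctx sets the context window for this request.
        "options": {"num_ctx": num_ctx},
    }


def chat(prompt: str, **kwargs) -> str:
    """Send one chat turn to a running Ollama server and return the reply."""
    payload = json.dumps(build_chat_request(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(OLLAMA_URL, data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

With the server running, `chat("Summarize this PDF section...", num_ctx=32768)` would request a larger context window for a long input.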

Other Ways to Use the New OpenAI Models on RTX

Enthusiasts and developers can also try the gpt-oss models on RTX AI PCs with GPUs that have at least 16GB of VRAM, through various other RTX-powered applications and frameworks.
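A back-of-the-envelope calculation shows why 4-bit weights help smaller GPUs: weight storage is roughly parameters × bits per weight ÷ 8 bytes. The sketch below is hedged in several ways. It uses the nominal 20B and 120B parameter counts from the model names and assumes every weight is stored at MXFP4's 4.25 bits, which overstates how much of the model is actually in MXFP4. It also ignores the KV cache, activations and runtime overhead, so real VRAM needs are higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts taken from the model names (an approximation).
for name, params in (("gpt-oss-20b", 20e9), ("gpt-oss-120b", 120e9)):
    print(f"{name}: ~{weight_memory_gb(params, 4.25):.1f} GB at 4.25 bits, "
          f"~{weight_memory_gb(params, 16):.0f} GB at 16 bits")
```

Under these assumptions, a nominal 20B-parameter model needs about 10.6 GB of weights at 4.25 bits, versus about 40 GB at 16 bits. This is consistent with it fitting on a 16GB GPU.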

NVIDIA continues to collaborate with the open-source community on both llama.cpp and the GGML tensor library to optimize performance on RTX GPUs. Recent contributions include implementing CUDA Graphs to reduce kernel-launch overhead and adding algorithms that reduce CPU overhead. Check out the llama.cpp GitHub repository to get started.
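Once a GGUF build of gpt-oss is loaded, llama.cpp's `llama-server` can expose an OpenAI-compatible chat-completions endpoint. Below is a minimal standard-library client sketch. It assumes a server was started locally (for example `llama-server -m <model>.gguf`, where the file name is a placeholder) and is listening on its default port, 8080. The helper names are hypothetical.

```python
import json
import urllib.request

# ASSUMPTION: llama-server is running locally on its default port 8080.
LLAMA_SERVER_URL = "http://localhost:8080/v1/chat/completions"


def build_payload(prompt: str, max_tokens: int = 512) -> dict:
    # OpenAI-style chat-completions body; llama-server answers with the
    # model it was launched with.
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response object."""
    return response["choices"][0]["message"]["content"]


def complete(prompt: str) -> str:
    data = json.dumps(build_payload(prompt)).encode("utf-8")
    req = urllib.request.Request(LLAMA_SERVER_URL, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return extract_reply(json.load(resp))
```

Because the request and response follow the OpenAI chat-completions shape, the same client code can often be pointed at other OpenAI-compatible local servers by changing only the URL.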

Windows developers can also access OpenAI’s new models via Microsoft AI Foundry Local, currently in public preview. Foundry Local is an on-device AI inferencing solution that integrates into workflows via the command line, SDK or application programming interfaces. Foundry Local uses ONNX Runtime, optimized through CUDA, with support for NVIDIA TensorRT for RTX coming soon. Getting started is easy: install Foundry Local and run `foundry model run gpt-oss-20b` in a terminal.
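To launch the model from a Python script rather than typing the command by hand, you can wrap the Foundry Local command from the paragraph above. This is a minimal sketch with hypothetical helper names. It only builds and runs that one command. Because the command is expected to start an interactive session, the child process simply inherits the current terminal.

```python
import shutil
import subprocess
from typing import List


def foundry_run_command(model_alias: str = "gpt-oss-20b") -> List[str]:
    """Argument list for: foundry model run <alias> (hypothetical helper)."""
    return ["foundry", "model", "run", model_alias]


def run_model(model_alias: str = "gpt-oss-20b") -> int:
    """Launch the model with the Foundry Local CLI and return its exit code."""
    if shutil.which("foundry") is None:
        raise FileNotFoundError("Foundry Local CLI 'foundry' not found on PATH")
    return subprocess.run(foundry_run_command(model_alias)).returncode
```

Checking `shutil.which` first produces a clear error if Foundry Local is not installed, instead of a less obvious failure from `subprocess`.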

The release of these open-source models kicks off the next wave of AI innovation from enthusiasts and developers looking to add reasoning to their AI-accelerated Windows applications.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, productivity apps and more on AI PCs and workstations.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter. Join NVIDIA’s Discord server to connect with community developers and AI enthusiasts for discussions on what’s possible with RTX AI.

Follow NVIDIA Workstation on LinkedIn and X.
